Improve uptime & lower costs with efficient rack cooling solutions for server rooms and data centers.

Cooling infrastructure is a significant part of a data center. The complex network of chillers, compressors, and air handlers creates the optimal computing environment, ensuring the longevity of the servers and the vitality of the organization they support.

Yet the current data center cooling ecosystem has come at a price. The EPA’s oft-cited 2007 report predicted that data center energy consumption, if left unchecked, would reach 100 billion kWh by 2011 with a corresponding energy bill of $7.4 billion. This conclusion, however, isn’t strictly based on Moore’s Law or the need for greater bandwidth. The estimate assumes that tomorrow’s processing power will be cooled with yesterday’s strategies. According to that report, the shortcomings of these designs, coupled with demand for more processing power, would require 10 new power plants to supply the electricity.

According to a more recent study commissioned by the NY Times from Jonathan Koomey, Ph.D., of Stanford, entitled Growth in Data center electricity use 2005 to 2010, “the rapid rates of growth in data center electricity use that prevailed from 2000 to 2005 slowed significantly from 2005 to 2010, yielding total electricity use by data centers in 2010 of about 1.3% of all electricity use for the world, and 2% of all electricity use for the US.” Assuming the baseline figures are correct, Koomey states that instead of doubling as predicted by the EPA study, energy consumption by data centers increased by 56% worldwide and only 36% in the US.

According to Koomey, the reduced growth rates over earlier estimates were “driven mainly by a lower server installed base than was earlier predicted rather than the efficiency improvements anticipated in the report to Congress.” In the NY Times article, Koomey goes on to say, “Mostly because of the recession, but also because of a few changes in the way these facilities are designed and operated, data center electricity consumption is clearly much lower than what was expected…”

However, this reduction in growth is likely temporary, as our appetite for internet access, streaming, and cloud-based services continues to increase. Data centers will continue to consume growing amounts of electricity, more and more data centers will come online, and data center managers will increasingly look to newer technologies to reduce their ever-growing electricity bills. Additionally, when you consider that the estimated energy consumption of the US in 2010 was around 3,889 billion kWh, 2% still represents close to 78 billion kWh. Clearly the trend is increased data consumption and, with it, increased energy consumption.

In light of these trends and despite the lower growth rates, many industry insiders are continuing to turn a critical eye toward cooling, recognizing both the inefficiencies of current approaches and the improvements possible through new technologies. The information contained herein is designed to assist the data center professional who, while keeping uptime and redundancy inviolate, must also balance growing demand for computing power with pressure to reduce energy consumption.

Issue: Understanding the Efficiency Metrics

Best Practice: Adoption and use of PUE/DCiE

“In furtherance of its mission, The Green Grid is focused on the following: defining meaningful, user-centric models and metrics; developing standards, measurement methods, processes and new technologies to improve data center performance against the defined metrics.”

– The Green Grid

Measurements like watts per square foot, kilowatts per rack, and cubic feet per minute (CFM) are ingrained in data center dialogue. Until recently, no standard measurement existed for data center efficiency. Enter the Green Grid, a consortium promoting responsible energy use within critical facilities. The group has successfully introduced two new terms to the data center lexicon: Power Usage Effectiveness (PUE) and Data Center Infrastructure Efficiency (DCiE).

Power Usage Effectiveness (PUE)

PUE is derived by dividing the total incoming power by the IT equipment load. The total incoming power includes, in addition to the IT load, the data center’s electrical and mechanical support systems such as chillers, air conditioners, fans, and power delivery equipment. Lower results are better, as they indicate more incoming power is consumed by IT equipment instead of the intermediary, support equipment.

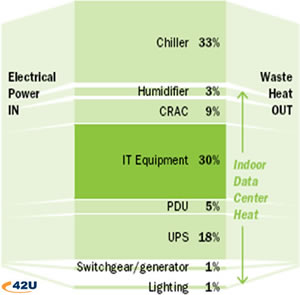

While it’s not the only consideration, cooling can be a major factor in a PUE measurement. Consider the following diagram, where the chiller, humidifier, and CRAC together consume 45% of the total energy coming into the facility.

The Uptime Institute approximates an industry average PUE of 2.5. Though there are no tiers or rankings associated with the values, PUE allows facilities to benchmark, measure, and improve their efficiency over time. Companies with large-scale data center operations, like Google and Microsoft, have published their PUE. In 2008, Google had an average PUE of 1.21 across their six company data centers. Microsoft’s new Chicago facility, packed with data center containers, calculated an average annual PUE of 1.22.

The widespread adoption of PUE, left in the hands of marketing departments, leaves the door open for manipulation. Though the equation seems simple, there are many variables to consider, and users should always weigh the context of these published measurements. At its core, however, the measurement encourages benchmarking and improvement at the site level: the actions individual professionals can take to improve the efficiency of their facilities.

Data Center Infrastructure Efficiency (DCiE)

DCiE is simply the reciprocal of PUE, expressed as a percentage: Total IT Power / Total Facility Power × 100%. DCiE presents a quick snapshot of the share of incoming energy consumed by the IT equipment. To illustrate the relationship between PUE and DCiE: “A DCiE value of 33% (equivalent to a PUE of 3.0) suggests that the IT equipment consumes 33% of the power in the data center.”
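The two ratios can be sketched in a few lines of Python. This is a minimal illustration rather than an official Green Grid tool: the function names and the 1,500 kW / 500 kW facility are hypothetical, chosen so that the result reproduces the PUE 3.0 / DCiE 33% pairing quoted above.

```python
def pue(total_facility_kw: float, it_load_kw: float) -> float:
    """Power Usage Effectiveness: total incoming power divided by IT load."""
    if it_load_kw <= 0:
        raise ValueError("IT load must be positive")
    if total_facility_kw < it_load_kw:
        raise ValueError("total facility power cannot be less than the IT load")
    return total_facility_kw / it_load_kw


def dcie_percent(total_facility_kw: float, it_load_kw: float) -> float:
    """Data Center Infrastructure Efficiency: reciprocal of PUE, as a percentage."""
    return 100.0 / pue(total_facility_kw, it_load_kw)


# Hypothetical facility: 1,500 kW at the utility meter, 500 kW reaching IT equipment.
example_pue = pue(1500.0, 500.0)            # 3.0
example_dcie = dcie_percent(1500.0, 500.0)  # roughly 33.3
```

Both inputs should be measured over the same period; a single instantaneous reading can look very different from an annual average.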

ASHRAE temperature and humidity recommendations:

The American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE) is an international technical society and a leading authority on recommended temperature and humidity ranges for data centers. ASHRAE TC 9.9 released its “2008 ASHRAE Environmental Guidelines for Datacom Equipment,” which expanded the recommended environmental envelope as follows:

| | 2004 Version | 2008 Version |
|---|---|---|
| Temperature | 20°C (68°F) to 25°C (77°F) | 18°C (64.4°F) to 27°C (80.6°F) |
| Humidity | 40% RH to 55% RH | 5.5°C DP (41.9°F DP) to 60% RH and 15°C DP (59°F DP) |

Conditions reflect air entering the server and IT equipment.
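As a rough sketch of how these ranges might be applied to sensor readings, the Python below checks an inlet temperature against either envelope and checks moisture against the 2008 limits. The class and function names are hypothetical, and the code assumes dew point and relative humidity are already measured at the equipment intake; it performs no psychrometric conversion.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Envelope:
    """Recommended dry-bulb range for air entering the equipment, in °C."""
    temp_min_c: float
    temp_max_c: float


# Values taken from the table above.
ASHRAE_2004 = Envelope(temp_min_c=20.0, temp_max_c=25.0)
ASHRAE_2008 = Envelope(temp_min_c=18.0, temp_max_c=27.0)


def inlet_temp_ok(temp_c: float, envelope: Envelope) -> bool:
    """True when the inlet temperature lies inside the recommended range."""
    return envelope.temp_min_c <= temp_c <= envelope.temp_max_c


def humidity_ok_2008(dew_point_c: float, rh_percent: float) -> bool:
    """2008 limits: dew point between 5.5 °C and 15 °C, and RH at or below 60%."""
    return 5.5 <= dew_point_c <= 15.0 and rh_percent <= 60.0
```

For example, a 26°C inlet reading passes the 2008 check but fails the 2004 one, which is the practical effect of the expanded envelope.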

kW/Ton measures HVAC efficiency by comparing a component’s energy consumption in kW with the amount of cooling it provides in tons (one ton equals 12,000 BTU/hr). Like PUE, the lower the value, the more efficient the device. A kW/Ton rating is possible for all of the major components of a data center cooling system, from the compressors to the server exhaust fans.

As data centers implement best practices and state-of-the-art technologies, they can expect the kW/Ton ratings to improve throughout the energy scheme.
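The kW/Ton calculation can be sketched as follows. The function names and the example chiller are hypothetical. The conversion to a coefficient of performance is not part of the discussion above; it relies on the standard equivalence of one ton of refrigeration to about 3.517 kW of heat removal.

```python
BTU_PER_HR_PER_TON = 12_000.0  # one ton of refrigeration, as defined above
KW_THERMAL_PER_TON = 3.517     # the same 12,000 BTU/hr expressed in kW of heat removed


def kw_per_ton(electrical_kw: float, cooling_btu_per_hr: float) -> float:
    """Electrical input in kW divided by cooling delivered in tons."""
    if cooling_btu_per_hr <= 0:
        raise ValueError("cooling output must be positive")
    tons = cooling_btu_per_hr / BTU_PER_HR_PER_TON
    return electrical_kw / tons


def cop_from_kw_per_ton(rating: float) -> float:
    """Coefficient of performance implied by a kW/Ton rating (higher is better)."""
    if rating <= 0:
        raise ValueError("kW/Ton rating must be positive")
    return KW_THERMAL_PER_TON / rating


# Hypothetical chiller: draws 60 kW while delivering 1,200,000 BTU/hr (100 tons).
chiller_rating = kw_per_ton(60.0, 1_200_000.0)     # 0.6 kW/Ton
chiller_cop = cop_from_kw_per_ton(chiller_rating)  # roughly 5.9
```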

Issue: Understanding the Cooling Components

Best Practice: Next generation of cooling solutions

“Even in many organizations where IT and facilities staffs are cooperative, budgetary and measurement objectives are often separate and sometimes at cross purposes. Facilities typically pays for data center electricity, and IT often does not know how much electricity is being used in the data center or what it costs. In particular, cooling costs are almost always invisible to IT”

– Gartner

A data center professional is not necessarily an HVAC engineer. But he must be mechanically savvy and comprehend the entire energy scheme within the cooling infrastructure. Rising energy costs and a volatile economy will make the corporate hierarchy call for reductions in operational costs. And, based on the PUE discussion, we’ve seen that cooling infrastructure can have a major impact on OpEx.

A Brief Example of the Traditional Cooling System

Computer Room Air Conditioners (CRAC)

- Refrigerant-based (DX), installed within the data center floor and connected to outside condensing units.

- Moves air throughout the data center via fan system: delivers cool air to the servers, returns exhaust air from the room.

Computer Room Air Handler (CRAH)

- Chilled water based, installed on data center floor and connected to outside chiller plant

- Moves air throughout the data center via fan system: delivers cool air to the servers, returns exhaust air from the room.

Humidifier

- Usually installed within CRAC / CRAH and replaces water loss before the air exits the A/C units. Also available in standalone units.

- Ensures that humidity levels fall within ASHRAE’s recommended range

Chiller

- The data center chiller produces chilled water via refrigeration process.

- Delivers chilled water via pumps to CRAH.

The principles of data center cooling (air delivery, movement, and heat rejection) are not complex, but these systems are. There are a number of smaller components like compressors, fans, and pumps, which shape the system’s operation and effectiveness.

Even within the “traditional” generation, nothing is one size fits all; cooling solutions are often dependent on factors like room layout, installation densities, and geographic location. 42U’s cooling technologies provide the data center manager with thorough product overviews, data on ROI, installation and commissioning, and objective product recommendation based strictly on user application, environment, and goals.

Airflow Management

Issue: Understanding Airflow Management

Best Practice: Measurement, CFD Analysis, Containment

“In most cases, a fully developed air management strategy can produce significant and measurable economic benefits and, therefore, should be the starting point when implementing a data center energy savings program”

– The Green Grid

The cooling components are charged with creating and moving air on the data center floor. From there, the room itself must maintain separate climates – the cool air required by the servers and the hot air they exhaust. Without boundaries, the air paths mix, resulting in both economic and ecological consequences.

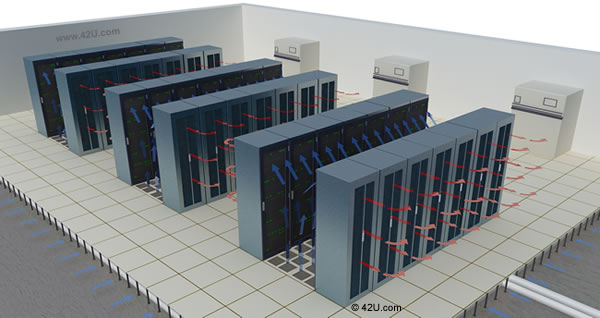

In the early 2000s, Robert Sullivan, an Uptime Institute scientist, advanced the concept of hot aisle/cold aisle, attempting to achieve air separation within the server room. The design, which aligns data center cabinets into alternating rows, endures in critical facilities throughout the world and is widely regarded as the first step in improving airflow management.

The arrangement, however, lacks precise air delivery and removal, leaving users a new set of challenges.

- Bypass air (conditioned air that does not reach computer equipment, escaping through cable cut-outs, holes under cabinets, misplaced perforated tiles or holes in the computer room perimeter walls) limits the precise delivery of cold air at the server intake.

- Hot air recirculation, where waste heat enters the cold aisle, forces the cooling infrastructure to supply colder air to the equipment to offset this mixing.

- Hot air contamination prevents the air handlers from receiving the warmest possible exhaust air, rendering their operation less efficient.

- Hot spots may persist as a result of all of the above.

Users can address these inefficiencies through measurement, modeling, and analysis. These tools, including Computational Fluid Dynamics (CFD) analysis and air velocity and pressure gauging, provide a snapshot of your environment and pinpoint problem areas. Furthermore, real-time measurement provides an immediate analysis of your data center environment. Significant improvements are often achieved with quick, inexpensive remedies like blanking panels, brush strips, and a modular containment system.

Cold Aisle Containment

Cold Aisle Containment attempts to maximize the hot aisle/cold aisle arrangement by encasing the cold aisle with barriers made of metal, plastic, or fiberglass. This approach addresses the above challenges, ensuring the cold air stays at the server intake while the air handlers receive the warmer exhaust air, improving their efficiency.

Hot Aisle Containment

In Hot Aisle Containment, the hot aisle is now enclosed, using the same barriers as its cold aisle counterpart. The design captures exhaust air via In-Row air conditioners, conditions it, and returns it to the cold aisle. AC efficiency is further improved as neither the hot exhaust air nor cold inlet air has far to travel.

High Density Cooling

Issue: Designing, Implementing, Managing High Density

Best Practice: Next Generation Cooling Technology

“The trend towards higher density cabinets and racks will continue unabated through 2012, increasing both the density of compute resources on the data center floor, and the density of both power and cooling required to support them.”

– Gartner

The industry is exploring progressive cooling solutions because the current generation, discussed earlier, has proven insufficient and inflexible in the face of increased computing requirements. (Chillers, for instance, are estimated to consume 33% of a facility’s total power in current layouts.) In its report, the EPA classified some of the latest options as either “best practice” or “state-of-the-art” and approximated gains of 70-80% in infrastructure efficiency through their use.

| Best Practice | State of the Art |
|---|---|
| Free Cooling | Direct Liquid Cooling |
| Air Side Economizers | Close-Coupled Cooling |
| Water Side Economizers | |
| – Evaporative Cooling | |
| – Dry Cooling | |

Free Cooling

Free cooling brings Mother Nature into the data center. When the ambient temperature and humidity are favorable, an economizer system circumvents some of the cooling infrastructure and uses the outside air as a cooling mechanism. The economizers come in two forms.

An air side economizer uses the outside climate to cool the data center. The outside air is distributed to the cabinets via the existing air delivery system, but no mechanical cooling is needed for heat rejection. Read more about air side economizers here https://www.42u.com/cooling/air-side-economizers.htm

A water side economizer uses the outside air in conjunction with a chiller system. Instead of compressors, the outside air cools the water, which is then pumped to data center CRAHs. Water side economizers are marketed as either evaporative coolers or dry coolers.

Read more about water side economizers here https://www.42u.com/cooling/water-side-economizers.htm

Economizer use and ROI depend heavily on climate, meaning the data center manager must thoroughly review readings like wet bulb temperature, dry bulb temperature, and relative humidity for his location. For those with optimal environments (low night-time or seasonal temperatures) there will be compelling arguments for their use, especially their impact at the chiller level.
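A first pass at that climate review can be sketched as a count of the hours in which an outdoor reading is at or below a chosen limit. Everything named here is hypothetical: the function names, the sample day of hourly readings, and the 10°C limit. A real study would use a full year of local weather data, the reading that matters for the economizer type (for instance wet bulb for evaporative designs), and a limit taken from the equipment's specifications.

```python
from typing import Sequence


def free_cooling_hours(hourly_readings_c: Sequence[float], limit_c: float) -> int:
    """Count the hours whose outdoor reading is at or below the limit."""
    return sum(1 for reading in hourly_readings_c if reading <= limit_c)


def free_cooling_fraction(hourly_readings_c: Sequence[float], limit_c: float) -> float:
    """Share of the sampled hours that qualify for economizer operation."""
    if not hourly_readings_c:
        raise ValueError("at least one reading is required")
    return free_cooling_hours(hourly_readings_c, limit_c) / len(hourly_readings_c)


# Hypothetical day of hourly outdoor readings in °C, midnight to 11 pm.
sample_day = [8, 7, 6, 6, 5, 5, 6, 8, 10, 12, 14, 15,
              16, 17, 17, 16, 15, 13, 12, 11, 10, 9, 9, 8]

hours = free_cooling_hours(sample_day, limit_c=10.0)     # 13 of 24 hours
share = free_cooling_fraction(sample_day, limit_c=10.0)  # roughly 0.54
```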

Close-Coupled Liquid Cooling

Close-coupled liquid cooling expands further on air management and containment. The use of water and the proximity of the heat transfer increase efficiency and enable some close-coupled cooling designs to operate with elevated chilled water temperatures. Higher inlet water temperatures can reduce the energy needed for mechanical cooling and also maximize the number of free cooling hours per year.

Elevated Chilled Water Temperatures

For facilities with a chiller infrastructure, the EPA recommends raising the chilled water temperature. Different sources estimate a traditional supply temperature of 42-45°F. A higher supply temperature improves chiller efficiency because the chiller consumes less energy to produce it. Higher set points can also ease the transition to water side economizers.

In a survey conducted by the Uptime Institute, 39% of enterprise data center managers expected their data centers to run out of cooling capacity in the next 12-24 months, and 21% claimed they would run out of cooling capacity in 12-60 months. In many facilities, the power required to cool IT equipment rivals or exceeds the power required to run that equipment.

Overall power in the data center is fast reaching capacity as well. An obvious remedy is to implement cooling best practices wherever possible and to use in-row cooling to address hot spots. In the same Uptime survey, 42% of these data center managers expected to run out of power capacity within 12-24 months, and another 23% claimed that they would run out of power capacity in 24-60 months. Greater attention to energy efficiency and consumption is critical.

To optimize the cooling in your data center, a good first step is an in-depth analysis of your current environment. Such an analysis provides a holistic understanding of the facility, increases awareness of your critical risk factors, benchmarks performance metrics, and generates a punch list of opportunities for cooling improvement.

Heat is just one of the many factors that affect IT performance. Beat the heat with scalable climate control solutions. From passive air to active liquid cooling, the best and most flexible cooling concepts are designed according to your requirements. Below are details on some of the latest energy-efficient data center cooling solutions available.

Server Room Cooling Solutions

Up to 60 kW cooling output, with three cooling modules possible per equipment rack. Read more:

Effective Cooling Strategies For Today’s Datacenters ( https://www.42u.com/cooling-strategies.htm ), Liquid Cooling for Data Centers ( https://www.42u.com/liquid-cooling.htm ), and Rack Cooling ( https://www.42u.com/rack-cooling.htm ).

Computer Room Cooling Services:

Specialized engineering and educational services that enhance data center performance without additional capital investments. These services will help you boost data center reliability, optimize your current cooling infrastructure, enable precision cooling to eliminate hot spots, dramatically reduce bypass airflow, support ASHRAE compliance, and understand the dynamics of your data center, including cooling requirements and deficiencies.

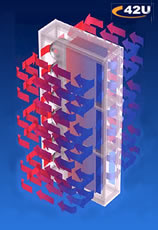

Rittal Liquid Cooling Package

Developed to remove high levels of waste heat from server enclosures, this high density cooling solution utilizes air/water heat exchangers providing uniform, effective and affordable cooling for servers and similar IT equipment. The special horizontal airflow of the Rittal LCP represents an adaptation of this widespread cooling principle, providing cooled air uniformly throughout the complete height of the enclosure.

The liquid cooling unit is a modular, upgradeable, and temperature-neutral cooling concept.

- Up to 30 kW cooling output, with three cooling modules possible per equipment rack

- Controlled variable speed fan and water flow based on actual heat load generated in cabinet

- Constant temperature cold air provided at the front intake for optimized equipment use, hot air removed from rear

- Even air distribution along the entire height of the front 482.6 mm (19″) mounting angles

- Can be bayed between two 42U racks

- High energy efficiency in removing waste heat with no temperature impact in the room
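To give a sense of scale for water flow that tracks heat load, the sketch below applies the basic heat balance (heat removed = mass flow × specific heat × temperature rise). It is not the manufacturer's control logic: the function name and the 6 K water temperature rise are assumptions made for illustration, and water density is approximated as 1 kg per litre.

```python
WATER_SPECIFIC_HEAT_KJ_PER_KG_K = 4.186  # approximate value for liquid water
WATER_DENSITY_KG_PER_L = 1.0             # approximation, adequate for a rough estimate


def required_water_flow_l_per_s(heat_load_kw: float, water_temp_rise_k: float) -> float:
    """Water flow needed to carry away a heat load at a given temperature rise."""
    if heat_load_kw < 0:
        raise ValueError("heat load cannot be negative")
    if water_temp_rise_k <= 0:
        raise ValueError("temperature rise must be positive")
    # kW is kJ/s, so dividing by kJ/(kg*K) and K leaves kg/s.
    mass_flow_kg_per_s = heat_load_kw / (WATER_SPECIFIC_HEAT_KJ_PER_KG_K * water_temp_rise_k)
    return mass_flow_kg_per_s / WATER_DENSITY_KG_PER_L


# A 30 kW load with an assumed 6 K rise needs roughly 1.2 litres per second.
full_load_flow = required_water_flow_l_per_s(30.0, 6.0)
# Half the load at the same rise needs half the flow, which is why variable flow saves energy.
half_load_flow = required_water_flow_l_per_s(15.0, 6.0)
```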

Conclusion

“Greening” the data center often starts with the cooling infrastructure. This page addresses the basics, hoping to facilitate deeper discussions on current vs. future cooling technologies. From chillers to CRACs to economizers, there is no shortage of vendors who, armed with case studies, tables, and whitepapers, are championing the efficiency of their products. Our goal is to help the data center professional wade through mountains of vendor data to find the most germane, economical, and efficient products for his application.

References

Cappuccio, D. (2008). Creating Energy-Efficient, Low-Cost, High Performance Data Centers. Gartner Data Center Conference, (p. 4). Las Vegas.

EPA. (2007, August 2). EPA Report to Congress on Server and Data Center Energy Efficiency. Retrieved January 5, 2009, from Energy Star: http://www.energystar.gov/ia/partners/prod_development/downloads/EPA_Report_Exec_Summary_Final.pdf

Koomey, J. (2011, August 1). Growth in Data center electricity use 2005 to 2010. Oakland, CA: Analytics Press. http://www.analyticspress.com/datacenters.html

Markoff, J. (2011, July 31). Data Centers’ Power Use Less Than Was Expected. Retrieved July 5, 2012, from The New York Times: http://www.nytimes.com/2011/08/01/technology/data-centers-using-less-power-than-forecast-report-says.html

Sullivan, R. (2002). Alternating Cold and Hot Aisles Provides More Reliable Cooling for Server Farms. Retrieved December 15, 2008, from Open Xtra: http://www.openxtra.co.uk/article/AlternColdnew.pdf

The Green Grid. (2008, October 21). Seven Strategies To Improve Data Center Cooling Efficiency. Retrieved December 18, 2008, from The Green Grid: http://www.thegreengrid.org/en/Global/Content/white-papers/Seven-Strategies-to-Cooling

The Green Grid. (2009). The Green Grid: Home. Retrieved January 5, 2009, from: http://www.thegreengrid.org/home